Basics of crawling

Learn how to crawl the web with your scraper: how to extract links and URLs from web pages, and how to manage the collected links to visit new pages.

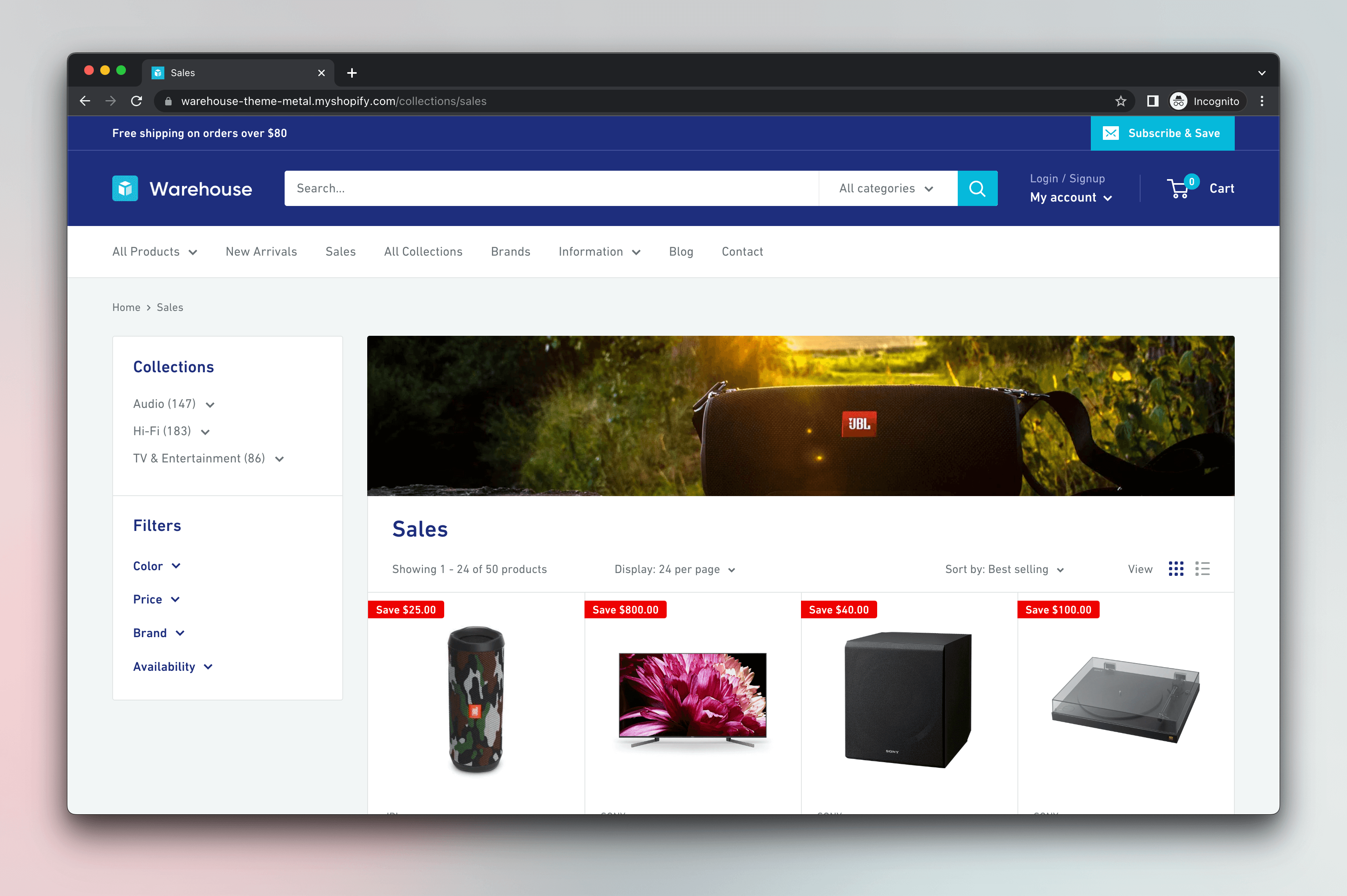

Welcome to the second section of our Web scraping basics for JavaScript devs course. In the Basics of data extraction section, we learned how to extract data from a web page, specifically from a template Shopify site called Warehouse store.

In this section, we will take a look at moving between web pages, which we call crawling. We will extract data about all the on-sale products on Warehouse store. To do that, we will need to crawl the individual product pages.

How do you crawl?

Crawling websites is a fairly straightforward process. We'll start by opening the first web page and extracting all the links (URLs) that lead to the other pages we want to visit. To do that, we'll use the skills learned in the Basics of data extraction section. We'll add some extra filtering to make sure we only get the correct URLs. Then, we'll save those URLs so that, if our scraper crashes with an error, we won't have to extract them again. Finally, we will visit those URLs one by one.
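
To make the filtering step more concrete, here is a minimal sketch that is not taken from the course code. It assumes the links have already been extracted into a hypothetical `hrefs` array, that `https://example.com` stands in for the real site, and that product pages live under a `/products/` path. The helper name `filterProductUrls` is also made up for illustration. It relies only on the built-in `URL` class.

```javascript
// Hypothetical href values, as they might be read from the <a> elements of a page.
const hrefs = [
    '/products/speaker',
    '/products/speaker#reviews',
    'https://example.com/products/tv',
    '/about',
    'https://other-site.example/products/radio',
    'mailto:info@example.com',
];

// Placeholder for the URL of the page the links were found on (an assumption).
const baseUrl = 'https://example.com/collections/sales';

function filterProductUrls(hrefs, baseUrl) {
    const origin = new URL(baseUrl).origin;
    const unique = new Set();

    for (const href of hrefs) {
        let url;
        try {
            // Resolve relative links against the URL of the current page.
            url = new URL(href, baseUrl);
        } catch {
            // Skip values that cannot be parsed as URLs.
            continue;
        }

        // A fragment points to the same page, so drop it before comparing.
        url.hash = '';

        // Keep only links on the same site under the assumed /products/ path.
        if (url.origin === origin && url.pathname.startsWith('/products/')) {
            unique.add(url.href);
        }
    }

    // The Set removes duplicates; return a plain array for further use.
    return [...unique];
}

const productUrls = filterProductUrls(hrefs, baseUrl);
console.log(productUrls);
```

Resolving every link to an absolute URL before filtering means relative and absolute links to the same page are treated as one URL, so each page ends up in the list only once.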

At any point, we can extract URLs, data, or both. Crawling can be separate from data extraction, but it's not a requirement and, in most projects, it's actually easier and faster to do both at the same time. To summarize, it goes like this:

1. Visit the start URL.

2. Extract new URLs (and data) and save them.

3. Visit one of the newly found URLs and save data and/or more URLs from it.

4. Repeat steps 2 and 3 until you have everything you need.
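
The steps above can be sketched as a simple loop over a queue of URLs. This is an illustration under stated assumptions rather than the scraper built in this course: the `fakeSite` object is a hypothetical in-memory stand-in for real pages, and the `crawl` and `getLinks` names are made up for the example. In a real scraper, `getLinks` would download the page and extract its links, and would typically be asynchronous.

```javascript
// Hypothetical in-memory "website": each URL maps to the links found on that page.
// In a real scraper, this lookup would be an HTTP request plus link extraction.
const fakeSite = {
    'https://example.com/': ['https://example.com/a', 'https://example.com/b'],
    'https://example.com/a': ['https://example.com/b', 'https://example.com/c'],
    'https://example.com/b': ['https://example.com/'],
    'https://example.com/c': [],
};

function crawl(startUrl, getLinks) {
    // Step 1: begin with the start URL.
    const queue = [startUrl];
    // Every URL ever queued, so that no page is visited twice.
    const seen = new Set(queue);
    // URLs in the order they were visited.
    const visited = [];

    // Step 4: repeat until there is nothing left to visit.
    while (queue.length > 0) {
        // Step 3: visit one of the saved URLs.
        const url = queue.shift();
        visited.push(url);

        // Step 2: extract new URLs and save the ones not seen before.
        for (const link of getLinks(url)) {
            if (!seen.has(link)) {
                seen.add(link);
                queue.push(link);
            }
        }
    }

    return visited;
}

const result = crawl('https://example.com/', (url) => fakeSite[url] ?? []);
console.log(result);
```

Tracking already queued URLs in a `Set` is what keeps the loop from running forever when pages link back to each other, as the start page and `/b` do in this example.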

Next up

First, let's make sure we all understand the foundations. In the next lesson, we will review the scraper code we already have from the Basics of data extraction section of the course.