Scraping a list of URLs from a Google Sheets document

You can export URLs from Google Sheets such as this one directly into an Actor's Start URLs field.

- Make sure the spreadsheet has one sheet and a simple structure to help the Actor find the URLs.

- Append /gviz/tq?tqx=out:csv to the base of the Google Sheets URL, right after the long document identifier. For example, https://docs.google.com/spreadsheets/d/1-2mUcRAiBbCTVA5KcpFdEYWflLMLp9DDU3iJutvES4w/gviz/tq?tqx=out:csv. This automatically exports the spreadsheet to CSV format.

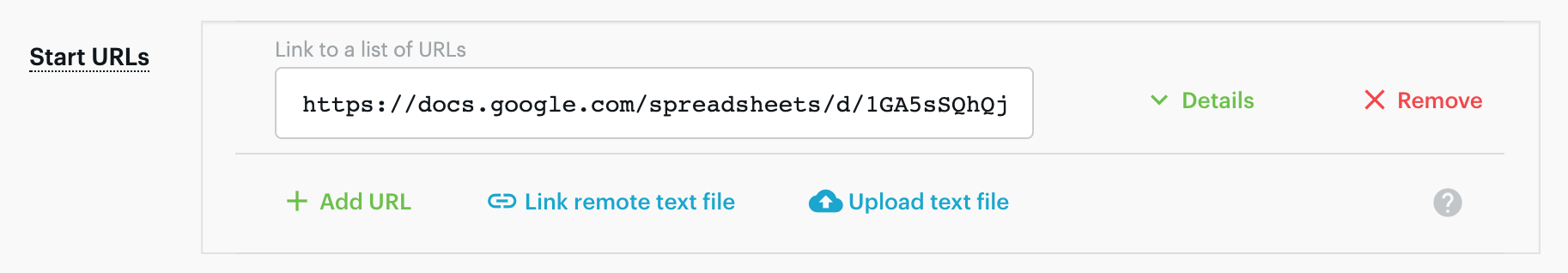
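If you prepare these links often, the URL rewrite described above can be scripted. The following is a minimal Python sketch, not part of any Apify tooling: the helper name `to_csv_export_url` is hypothetical, and it assumes the link you copied from the browser has the usual shape `https://docs.google.com/spreadsheets/d/<identifier>/...`, with a trailing part such as `/edit#gid=0` that should be dropped.

```python
import re

# Suffix from the step above; it asks Google Sheets for a CSV export.
CSV_EXPORT_SUFFIX = "/gviz/tq?tqx=out:csv"

# Matches the URL base up to and including the document identifier.
# Assumption: identifiers consist of letters, digits, hyphens and underscores.
_SHEET_BASE = re.compile(r"^https://docs\.google\.com/spreadsheets/d/[A-Za-z0-9_-]+")


def to_csv_export_url(sheet_url: str) -> str:
    """Return the CSV export URL for a Google Sheets link (hypothetical helper)."""
    match = _SHEET_BASE.match(sheet_url.strip())
    if match is None:
        raise ValueError(f"Not a recognizable Google Sheets URL: {sheet_url!r}")
    # Keep only the base (drops e.g. /edit#gid=0) and append the export suffix.
    return match.group(0) + CSV_EXPORT_SUFFIX
```

Calling `to_csv_export_url(...)` with the browser link of the example sheet should give back the example export URL shown above.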

In the Actor's input, click Link remote text file and paste the URL there:

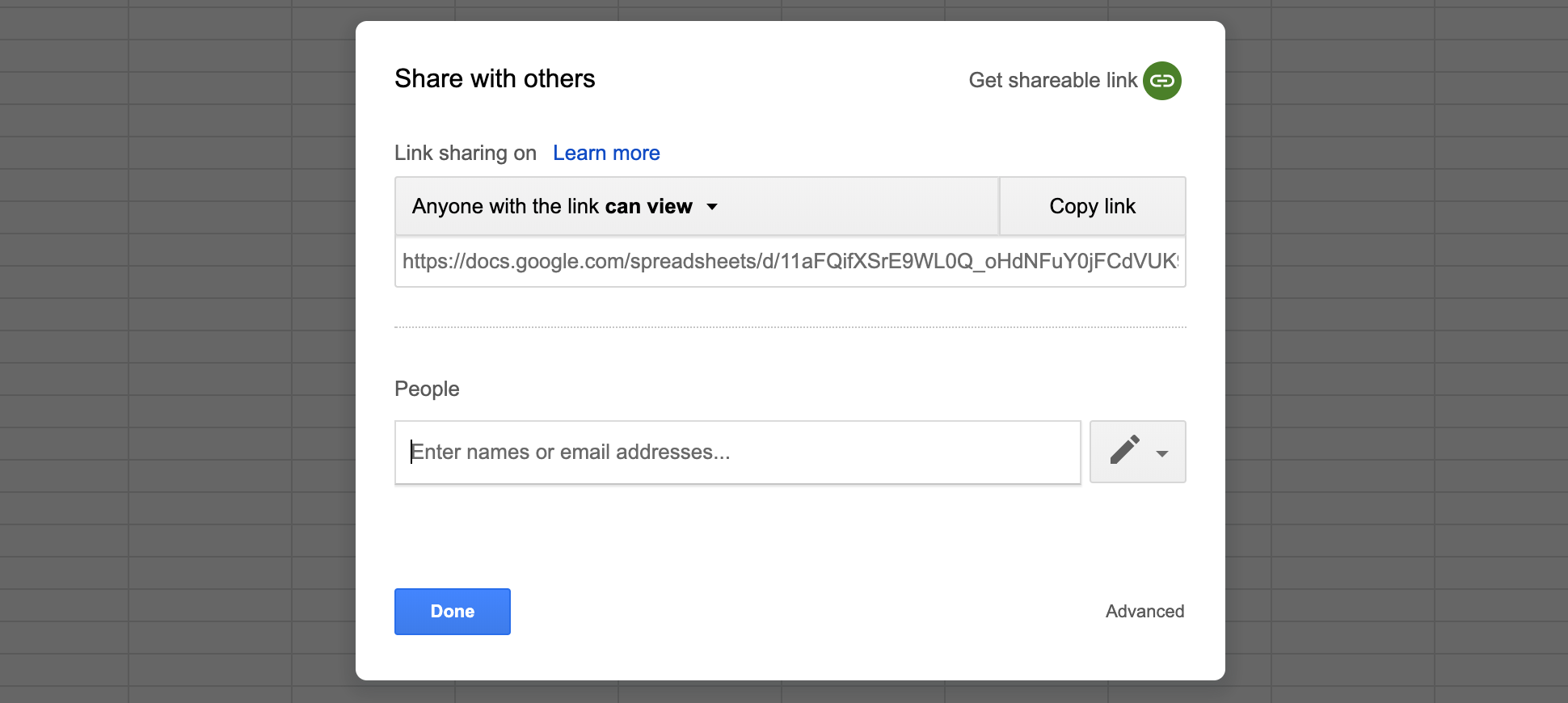

IMPORTANT: Make sure anyone with the link can view the document. Otherwise, the Actor will not be able to access it.
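
Before starting a run, you may want to check that the export link works for an anonymous visitor and preview the URLs it contains. The sketch below is one way to do that with the Python standard library. It rests on assumptions that are not confirmed by this article: that a publicly viewable sheet responds with a `text/csv` content type, that a private sheet responds with an error or a sign-in page instead, and that a URL is any cell starting with `http://` or `https://`. The Actor's own parsing may differ, and both function names are hypothetical.

```python
import csv
import io
import urllib.request


def extract_urls(csv_text: str) -> list[str]:
    """Collect every cell that looks like an http(s) URL, in reading order."""
    urls = []
    for row in csv.reader(io.StringIO(csv_text)):
        for cell in row:
            value = cell.strip()
            # Assumption: anything starting with http:// or https:// is a URL.
            if value.startswith(("http://", "https://")):
                urls.append(value)
    return urls


def fetch_csv(export_url: str, timeout: float = 10.0) -> str:
    """Download the export without credentials, as an anonymous client would."""
    # urlopen raises urllib.error.HTTPError on 4xx/5xx responses.
    with urllib.request.urlopen(export_url, timeout=timeout) as response:
        content_type = response.headers.get("Content-Type", "")
        body = response.read().decode("utf-8", errors="replace")
    # Assumption: a non-CSV response means the sheet is not publicly viewable.
    if "text/csv" not in content_type:
        raise RuntimeError(
            f"Expected CSV, got {content_type!r}; check the sharing settings"
        )
    return body
```

For example, `extract_urls(fetch_csv(export_url))` would return the list of URLs found in the sheet, or raise an error if the document cannot be read without signing in.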