Qdrant integration

Learn how to integrate Apify with Qdrant to transfer crawled data into the Qdrant vector database.

Qdrant is a high-performance managed vector database that lets users store and query dense vectors for next-generation AI applications such as recommendation systems, semantic search, and retrieval-augmented generation (RAG).

The Apify integration for Qdrant enables you to export results from Apify Actors and Dataset items into a specific Qdrant collection.

Prerequisites

Before you begin, ensure that you have the following:

- A Qdrant cluster set up.

- The URL of your Qdrant database and a Qdrant API key.

- An OpenAI API key to compute text embeddings.

- An Apify API token to access Apify Actors.

How to set up a Qdrant database and create a cluster

- Sign up or log in to your Qdrant account and create a new cluster.

- Specify the following details: provider, region, and name.

- Set up an API key for the cluster once it is created and its status is healthy.

With the cluster ready and its URL and API key in hand, you can proceed with integrating Apify.
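Before moving on, it can help to confirm that the URL and API key actually reach the cluster. The following is a minimal sketch using only the Python standard library. It assumes the cluster exposes Qdrant's REST API at the given URL and accepts the key in an `api-key` header; the function names are illustrative and not part of the Apify integration.

```python
import json
import urllib.request


def build_collections_request(qdrant_url: str, api_key: str) -> urllib.request.Request:
    """Build a GET request that lists the collections in a Qdrant cluster."""
    endpoint = qdrant_url.rstrip("/") + "/collections"
    return urllib.request.Request(endpoint, headers={"api-key": api_key}, method="GET")


def list_collection_names(qdrant_url: str, api_key: str) -> list[str]:
    """Send the request and return the collection names (needs network access)."""
    request = build_collections_request(qdrant_url, api_key)
    with urllib.request.urlopen(request, timeout=10) as response:
        payload = json.load(response)
    # Assumed response shape: {"result": {"collections": [{"name": ...}, ...]}, ...}
    return [item["name"] for item in payload["result"]["collections"]]
```

Calling `list_collection_names` with your cluster URL and API key should return a list (empty for a new cluster). An HTTP error at this point usually means the URL or key needs another look.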

Integration methods

You can integrate Apify with Qdrant using either the Apify Console or the Apify API client for Python.

The examples use the Website Content Crawler Actor, which crawls websites in depth, cleans the HTML by removing modals and navigation elements, and converts it to Markdown for training AI models or providing web content to LLMs and generative AI applications.

Apify Console

-

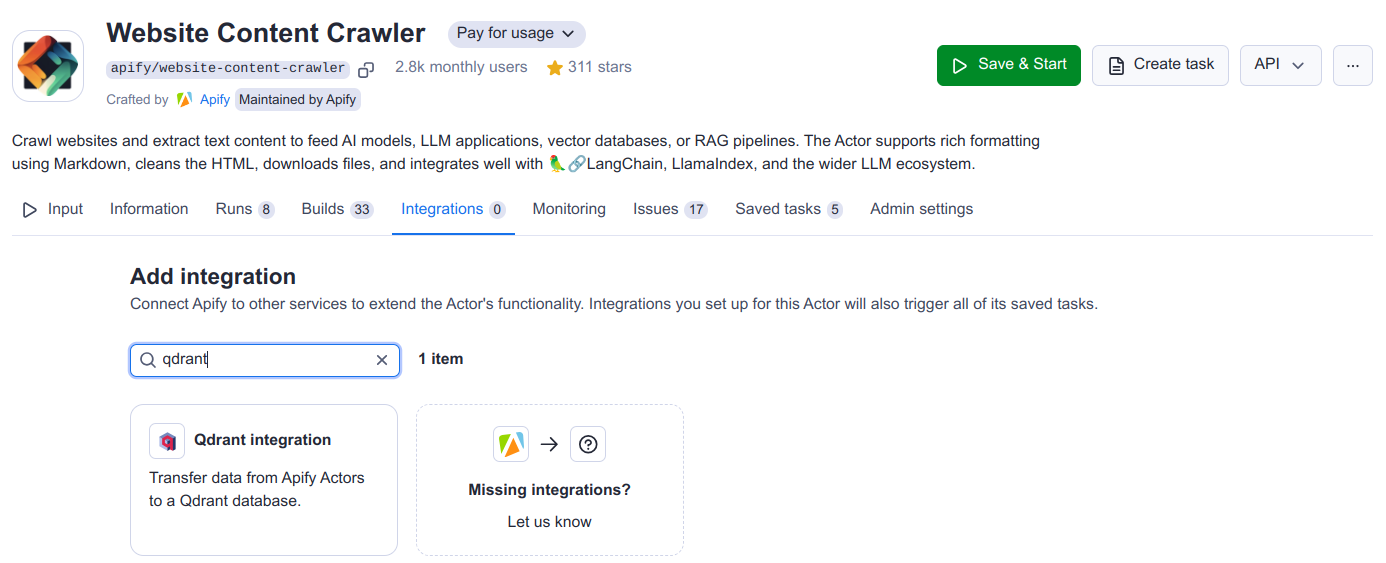

Set up the Website Content Crawler Actor in the Apify Console. Refer to this guide on how to set up website content crawl for your project.

-

Once you have the crawler ready, navigate to the integration section and add Apify's Qdrant integration.

-

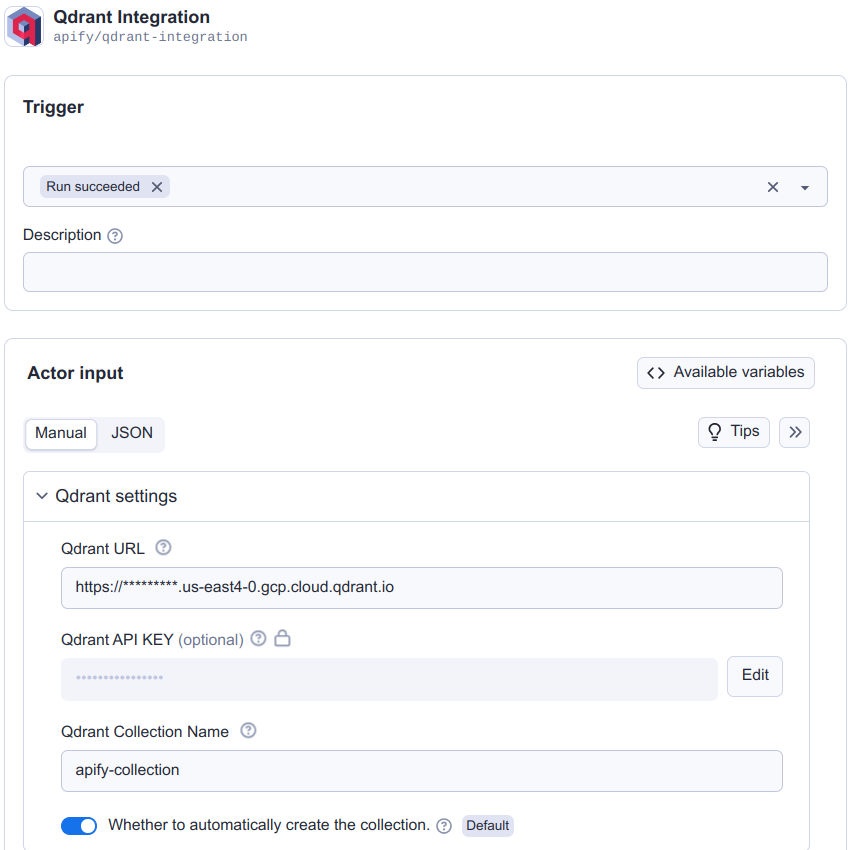

Select when to trigger this integration (typically when a run succeeds) and fill in all the required fields for the Qdrant integration. If you haven't created a collection, it can be created automatically with the specified model. You can learn more about the input parameters at the Qdrant integration input schema.

-

For a detailed explanation of the input parameters, including dataset settings, incremental updates, and examples, see the Qdrant integration description.

-

For an explanation on how to combine Actors to accomplish more complex tasks, refer to the guide on Actor-to-Actor integrations.

Python

Another way to send crawled data to Qdrant is through the Apify API client for Python.

- Install the Apify API client for Python by running the following command:

    pip install apify-client

- Create a Python script, import the client, and define your credentials:

    from apify_client import ApifyClient

    # Replace the placeholders with your own credentials.
    APIFY_API_TOKEN = "YOUR-APIFY-TOKEN"
    OPENAI_API_KEY = "YOUR-OPENAI-API-KEY"
    QDRANT_URL = "YOUR-QDRANT-URL"
    QDRANT_API_KEY = "YOUR-QDRANT-API-KEY"
    QDRANT_COLLECTION_NAME = "YOUR-QDRANT-COLLECTION-NAME"

    client = ApifyClient(APIFY_API_TOKEN)

- Call the Website Content Crawler Actor to crawl the Qdrant documentation and extract text content from the web pages:

    actor_call = client.actor("apify/website-content-crawler").call(
        run_input={"startUrls": [{"url": "https://qdrant.tech/documentation/"}]}
    )

- Call Apify's Qdrant integration and store all data in the Qdrant vector database:

    qdrant_integration_inputs = {
        # Target Qdrant cluster and collection
        "qdrantUrl": QDRANT_URL,
        "qdrantApiKey": QDRANT_API_KEY,
        "qdrantCollectionName": QDRANT_COLLECTION_NAME,
        "qdrantAutoCreateCollection": True,
        # Source dataset produced by the crawler run
        "datasetId": actor_call["defaultDatasetId"],
        "datasetFields": ["text"],
        # Incremental updates, keyed by page URL
        "enableDeltaUpdates": True,
        "deltaUpdatesPrimaryDatasetFields": ["url"],
        "deleteExpiredObjects": True,
        "expiredObjectDeletionPeriodDays": 30,
        # Embeddings and chunking
        "embeddingsProvider": "OpenAI",
        "embeddingsApiKey": OPENAI_API_KEY,
        "performChunking": True,
        "chunkSize": 1000,
        "chunkOverlap": 0,
    }

    actor_call = client.actor("apify/qdrant-integration").call(run_input=qdrant_integration_inputs)
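The integration input reads `defaultDatasetId` from the result of the crawler call, so it can be worth checking that the crawl finished before passing the ID along. A small guard is sketched here, assuming the run dictionary returned by `call()` carries a `status` key alongside `defaultDatasetId` and that a finished run reports `SUCCEEDED`. The helper name `dataset_id_from_run` is hypothetical.

```python
from typing import Any, Optional


def dataset_id_from_run(run: Optional[dict[str, Any]]) -> str:
    """Return the default dataset ID of a finished Actor run, or raise if unusable."""
    if run is None:
        raise RuntimeError("The Actor call did not return any run details.")
    status = run.get("status")
    # Assumption: a run that completed normally reports the status "SUCCEEDED".
    if status != "SUCCEEDED":
        raise RuntimeError(f"The Actor run ended with status {status!r}.")
    dataset_id = run.get("defaultDatasetId")
    if not dataset_id:
        raise RuntimeError("The Actor run has no default dataset.")
    return dataset_id
```

With this helper, the `datasetId` entry could be written as `dataset_id_from_run(actor_call)`, so a failed crawl stops the script instead of sending an empty dataset to Qdrant.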

You have successfully integrated Apify with Qdrant and the data is now stored in the Qdrant vector database.
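To confirm that points actually landed in the collection, you can ask Qdrant for a point count. The sketch below uses only the standard library and assumes Qdrant's REST endpoint `POST /collections/{name}/points/count` with the key sent in an `api-key` header. The function names are illustrative.

```python
import json
import urllib.parse
import urllib.request


def build_count_request(qdrant_url: str, api_key: str, collection: str) -> urllib.request.Request:
    """Build a POST request asking Qdrant for the exact number of points in a collection."""
    path = "/collections/" + urllib.parse.quote(collection, safe="") + "/points/count"
    body = json.dumps({"exact": True}).encode("utf-8")
    return urllib.request.Request(
        qdrant_url.rstrip("/") + path,
        data=body,
        headers={"api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def parse_count(response_body: bytes) -> int:
    """Extract the point count from a count response body."""
    # Assumed response shape: {"result": {"count": <int>}, "status": "ok", ...}
    return int(json.loads(response_body)["result"]["count"])


def count_points(qdrant_url: str, api_key: str, collection: str) -> int:
    """Send the count request and return the number of points (needs network access)."""
    request = build_count_request(qdrant_url, api_key, collection)
    with urllib.request.urlopen(request, timeout=10) as response:
        return parse_count(response.read())
```

A count greater than zero after the integration run suggests the chunks were embedded and stored. With chunking enabled, the count will typically exceed the number of crawled pages.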