Locating HTML elements with Python

In this lesson we'll locate product data in the downloaded HTML. We'll use Beautiful Soup to find the HTML elements which contain details about each product, such as its title or price.

In the previous lesson, we managed to print the text of the page's main heading and to count how many products are in the listing. Let's combine the two. What happens if we print .text for each product card?

import httpx

from bs4 import BeautifulSoup

url = "https://warehouse-theme-metal.myshopify.com/collections/sales"

response = httpx.get(url)

response.raise_for_status()

html_code = response.text

soup = BeautifulSoup(html_code, "html.parser")

for product in soup.select(".product-item"):

    print(product.text)

Well, it definitely prints something…

$ python main.py

Save $25.00

JBL

JBL Flip 4 Waterproof Portable Bluetooth Speaker

Black

+7

Blue

+6

Grey

...

To get details about each product in a structured way, we'll need a different approach.

Locating child elements

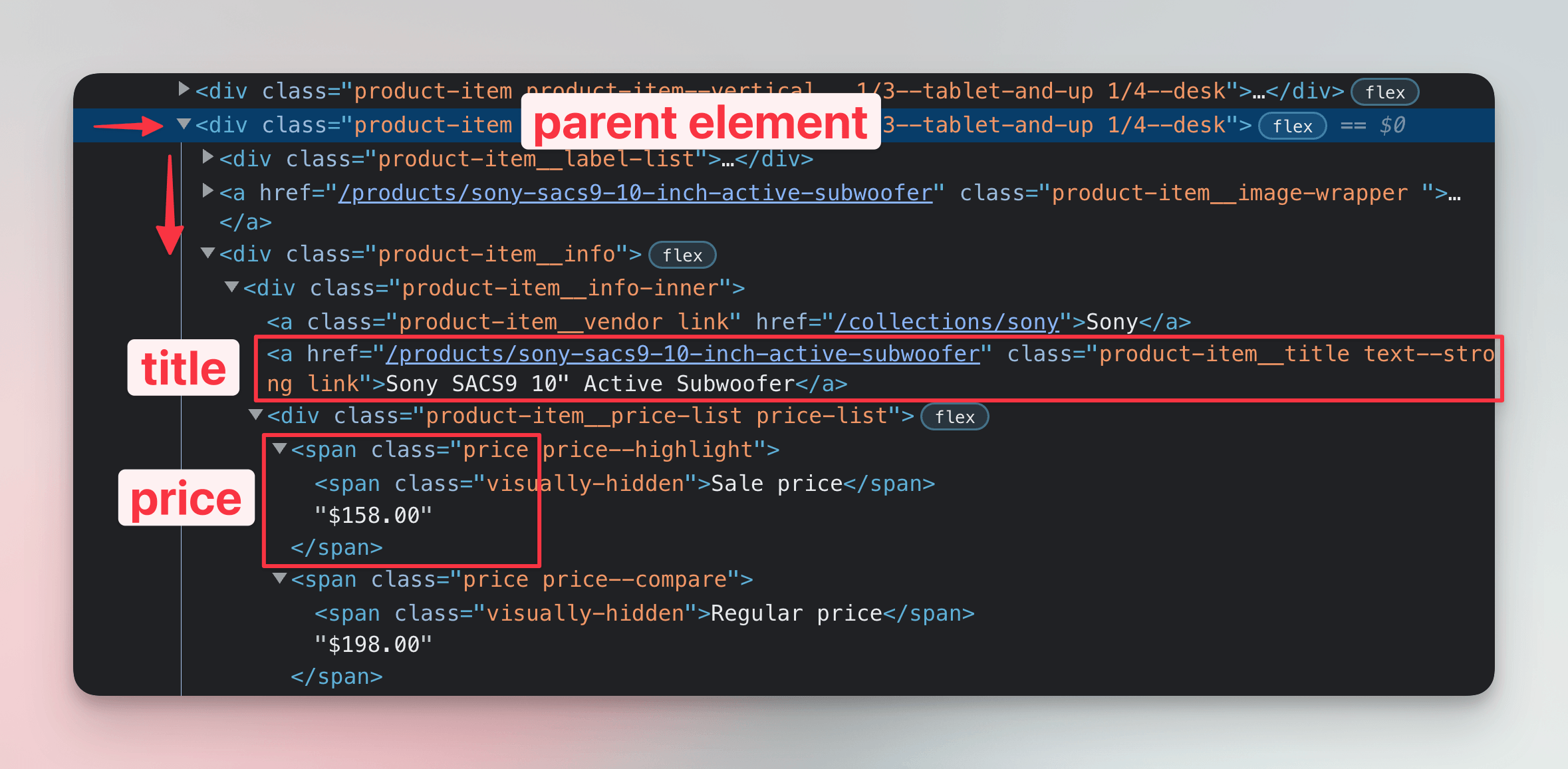

As in the browser DevTools lessons, we need to change the code so that it locates child elements for each product card.

We should be looking for elements which have the product-item__title and price classes. We already know how that translates to CSS selectors:

import httpx

from bs4 import BeautifulSoup

url = "https://warehouse-theme-metal.myshopify.com/collections/sales"

response = httpx.get(url)

response.raise_for_status()

html_code = response.text

soup = BeautifulSoup(html_code, "html.parser")

for product in soup.select(".product-item"):

    titles = product.select(".product-item__title")

    first_title = titles[0].text

    prices = product.select(".price")

    first_price = prices[0].text

    print(first_title, first_price)

Let's run the program now:

$ python main.py

JBL Flip 4 Waterproof Portable Bluetooth Speaker

Sale price$74.95

Sony XBR-950G BRAVIA 4K HDR Ultra HD TV

Sale priceFrom $1,398.00

...

There's still some room for improvement, but it's already much better!

Locating a single element

Often, we want to assume in our code that a certain element exists only once. It's a bit tedious to work with lists when you know you're looking for a single element. For this purpose, Beautiful Soup offers the .select_one() method. Like document.querySelector() in browser DevTools, it returns just one result or None. Let's simplify our code!

import httpx

from bs4 import BeautifulSoup

url = "https://warehouse-theme-metal.myshopify.com/collections/sales"

response = httpx.get(url)

response.raise_for_status()

html_code = response.text

soup = BeautifulSoup(html_code, "html.parser")

for product in soup.select(".product-item"):

    title = product.select_one(".product-item__title").text

    price = product.select_one(".price").text

    print(title, price)

This program does the same as the one we already had, but its code is more concise.
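To compare the two methods in isolation, here's a minimal sketch that runs on a small made-up HTML snippet instead of the live page. The markup and the .missing selector are assumptions for illustration only.

```python
from bs4 import BeautifulSoup

# Made-up product card, only loosely modeled on the real listing
html_code = """
<div class="product-item">
  <a class="product-item__title">JBL Flip 4 Waterproof Portable Bluetooth Speaker</a>
  <span class="price">$74.95</span>
</div>
"""

soup = BeautifulSoup(html_code, "html.parser")
product = soup.select_one(".product-item")

# select() always returns a list, so we index into it
title_from_list = product.select(".product-item__title")[0].text

# select_one() returns the first match directly
title_from_one = product.select_one(".product-item__title").text

print(title_from_list == title_from_one)

# When nothing matches, select() gives an empty list and select_one() gives None
no_matches = product.select(".missing")
no_match = product.select_one(".missing")
print(len(no_matches), no_match)
```

Both calls find the same element. They only differ in what they hand back, which matters once a selector stops matching.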

We assume that the selectors we pass to the select() or select_one() methods return at least one element. If they don't, calling [0] on an empty list or .text on None would crash the program. If you perform type checking on your Python program, the code examples above will trigger warnings about this.

Not handling these cases allows us to keep the code examples more succinct. Additionally, if we expect the selectors to return elements but they suddenly don't, it usually means the website has changed since we wrote our scraper. Letting the program crash in such cases is a valid way to notify ourselves that we need to fix it.
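If we'd rather cope with incomplete products than crash, one option is to check for None explicitly. The following is only a sketch: the HTML is made up, and text_or_none() is a hypothetical helper, not something Beautiful Soup provides.

```python
from bs4 import BeautifulSoup

# Made-up HTML for illustration: the second card lacks a price element
html_code = """
<div class="product-item">
  <a class="product-item__title">Speaker</a>
  <span class="price">$74.95</span>
</div>
<div class="product-item">
  <a class="product-item__title">TV</a>
</div>
"""


def text_or_none(parent, selector):
    """Hypothetical helper: stripped text of the first match, or None."""
    element = parent.select_one(selector)
    if element is None:
        return None
    return element.text.strip()


soup = BeautifulSoup(html_code, "html.parser")

results = []
for product in soup.select(".product-item"):
    title = text_or_none(product, ".product-item__title")
    price = text_or_none(product, ".price")
    results.append((title, price))

print(results)
```

The trade-off is that a missing price now passes silently as None, so a change on the website is easier to overlook than with a crash.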

Precisely locating price

In the output we can see that the price isn't located precisely:

JBL Flip 4 Waterproof Portable Bluetooth Speaker

Sale price$74.95

Sony XBR-950G BRAVIA 4K HDR Ultra HD TV

Sale priceFrom $1,398.00

...

For each product, our scraper also prints the text Sale price. Let's look at the HTML structure again. Each bit containing the price looks like this:

<span class="price">

<span class="visually-hidden">Sale price</span>

$74.95

</span>

When translated to a tree of Python objects, the element with class price will contain several nodes:

- a textual node with white space,

- a span HTML element,

- a textual node representing the actual amount and possibly also white space.

We can use Beautiful Soup's .contents property to access individual nodes. It returns a list of nodes like this:

["\n", <span class="visually-hidden">Sale price</span>, "$74.95"]
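We can check this on a small scale. The sketch below parses just the snippet above rather than the whole page, and prints the type of each node. The exact white space in the real HTML may differ from what's assumed here, which is why the example calls .strip() on the last node.

```python
from bs4 import BeautifulSoup

# The price snippet from above, parsed on its own
html_code = """<span class="price">
  <span class="visually-hidden">Sale price</span>
  $74.95
</span>"""

soup = BeautifulSoup(html_code, "html.parser")
price = soup.select_one(".price")

# Each item in .contents is either a string node or a nested element
node_types = [type(node).__name__ for node in price.contents]
print(node_types)

# The last node holds the amount, surrounded by white space
amount = price.contents[-1].strip()
print(amount)
```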

It seems like we can read the last element to get the actual amount. Let's fix our program:

import httpx

from bs4 import BeautifulSoup

url = "https://warehouse-theme-metal.myshopify.com/collections/sales"

response = httpx.get(url)

response.raise_for_status()

html_code = response.text

soup = BeautifulSoup(html_code, "html.parser")

for product in soup.select(".product-item"):

    title = product.select_one(".product-item__title").text

    price = product.select_one(".price").contents[-1]

    print(title, price)

If we run the scraper now, it should print prices as only amounts:

$ python main.py

JBL Flip 4 Waterproof Portable Bluetooth Speaker $74.95

Sony XBR-950G BRAVIA 4K HDR Ultra HD TV From $1,398.00

...

Formatting output

The results seem to be correct, but they're hard to verify because the prices visually blend with the titles. Let's set a different separator for the print() function:

print(title, price, sep=" | ")

The output is much nicer this way:

$ python main.py

JBL Flip 4 Waterproof Portable Bluetooth Speaker | $74.95

Sony XBR-950G BRAVIA 4K HDR Ultra HD TV | From $1,398.00

...

Great! We have managed to use CSS selectors and walk the HTML tree to get a list of product titles and prices. But wait a second—what's From $1,398.00? One does not simply scrape a price! We'll need to clean that. But that's a job for the next lesson, which is about extracting data.

Exercises

These challenges are here to help you test what you’ve learned in this lesson. Try to resist the urge to peek at the solutions right away. Remember, the best learning happens when you dive in and do it yourself!

You're about to touch the real web, which is practical and exciting! But websites change, so some exercises might break. If you run into any issues, please leave a comment below or file a GitHub Issue.

Scrape list of International Maritime Organization members

Download the International Maritime Organization's page with the list of members, use Beautiful Soup to parse it, and print the names of all the members mentioned in all tables (including Associate Members). This is the URL:

https://www.imo.org/en/ourwork/ero/pages/memberstates.aspx

Your program should print the following:

Albania

Libya

Algeria

Lithuania

...

Liberia

Zimbabwe

Faroes

Hong Kong, China

Macao, China

Solution

import httpx

from bs4 import BeautifulSoup

url = "https://www.imo.org/en/ourwork/ero/pages/memberstates.aspx"

response = httpx.get(url)

response.raise_for_status()

soup = BeautifulSoup(response.text, "html.parser")

for table in soup.select(".content table"):

    for row in table.select("tr"):

        if cells := row.select("td"):

            first_column = cells[0]

            if text := first_column.text.strip():

                print(text)

            if len(cells) > 2:

                third_column = cells[2]

                if text := third_column.text.strip():

                    print(text)

We visit each row and if we find some table data cells, we take the text of the first and third ones. We print it if it's not empty. This approach skips table headers and empty rows.

Use CSS selectors to their max

Simplify your International Maritime Organization scraper from the previous exercise. Use just one for loop with a single CSS selector that targets all relevant table cells.

You may want to read up on the descendant combinator and the :nth-child() pseudo-class.

Solution

import httpx

from bs4 import BeautifulSoup

url = "https://www.imo.org/en/ourwork/ero/pages/memberstates.aspx"

response = httpx.get(url)

response.raise_for_status()

soup = BeautifulSoup(response.text, "html.parser")

for cell in soup.select(".content table tr td:nth-child(odd)"):

    if name := cell.text.strip():

        print(name)
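To see how the selector behaves without downloading anything, here's a sketch with a made-up table. It only assumes that names sit in the first and third cells of each row, as in the previous solution. The real page's markup may differ, and the col2 and col4 values are placeholders.

```python
from bs4 import BeautifulSoup

# Made-up table for illustration, not the real markup of the IMO page
html_code = """
<div class="content">
  <table>
    <tr><th>Member</th><th>Info</th><th>Member</th><th>Info</th></tr>
    <tr><td>Albania</td><td>col2</td><td>Libya</td><td>col4</td></tr>
    <tr><td>Algeria</td><td>col2</td><td></td><td></td></tr>
  </table>
</div>
"""

soup = BeautifulSoup(html_code, "html.parser")

names = []
# td:nth-child(odd) matches td elements that are the 1st, 3rd, 5th... child of their row
for cell in soup.select(".content table tr td:nth-child(odd)"):
    # Skip cells that contain nothing but white space
    if name := cell.text.strip():
        names.append(name)

print(names)
```

The header row is skipped because its cells are th elements, and the empty third cell in the last row is filtered out by the strip() check.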

Scrape F1 news

Download the Guardian's page with the latest F1 news, use Beautiful Soup to parse it, and print the titles of all the listed articles. This is the URL:

https://www.theguardian.com/sport/formulaone

Your program should print something like the following:

Wolff confident Mercedes are heading to front of grid after Canada improvement

Frustrated Lando Norris blames McLaren team for missed chance

Max Verstappen wins Canadian Grand Prix: F1 – as it happened

...

Solution

import httpx

from bs4 import BeautifulSoup

url = "https://www.theguardian.com/sport/formulaone"

response = httpx.get(url)

response.raise_for_status()

soup = BeautifulSoup(response.text, "html.parser")

for title in soup.select("#maincontent ul li h3"):

    print(title.text)